Tracking Performance Outcomes

Early in my career, I did a fair amount of work developing systems that track educational materials metadata. At first, this was simply out of my own interest and curiosity, and to explore the bounds of database capabilities. Later, when I joined the OpenCourseWare team, it turned out that the model I had been developing was almost an exact match for the system needed to track all of the educational materials that were to be built into the OpenCourseWare site. The system I built for that purpose, for better or worse, is still being used by the OCW team sixteen years on.

During my time at OPENPediatrics, I built a very similar system to track the educational materials there, which, to my knowledge, is also still in use. I also worked a fair bit at OP on continuing medical education certification, as we offered that on many of the courses on the site. In the process of figuring out how to do that, I was exposed to the Accreditation Council for Graduate Medical Education Core Competencies, and spent some time thinking about the logical relationship between educational offerings and competency frameworks such as that one.

I realized that the connective tissue between the two was at the learning outcome level, and while achievement on learning outcomes could be rolled up to measure success in a course, they could also, if they were linked to relevant competencies, be rolled up across courses to provide a score of academic performance on competencies. While we never used it, I built a model in the OP database for doing just this. Using that model, you could take a student’s performance on any assessment given within the OP system (at the question-by-question level) and track that score up through the associated learning outcome, then the generalized skill it represented, and finally the competency encompassing that skill. With this, you could generate a composite competency score.
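To make the roll-up concrete, here is a minimal sketch in Python rather than in a database. It is not the actual OP model: the identifiers, the sample links between levels, and the choice to average scores at each level are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical links between levels; a real system would store these in tables.
QUESTION_TO_OUTCOME = {"q1": "outcome_a", "q2": "outcome_a", "q3": "outcome_b"}
OUTCOME_TO_SKILL = {"outcome_a": "skill_x", "outcome_b": "skill_y"}
SKILL_TO_COMPETENCY = {"skill_x": "competency_1", "skill_y": "competency_1"}


def roll_up(scores, parent_of):
    """Average child scores into the parent each child is linked to."""
    grouped = defaultdict(list)
    for child, score in scores.items():
        grouped[parent_of[child]].append(score)
    return {parent: sum(vals) / len(vals) for parent, vals in grouped.items()}


def composite_competency_scores(responses):
    """responses maps question id -> (points earned, points possible)."""
    # Normalize every question to a 0-1 scale (an assumed, simple choice).
    question_scores = {
        q: earned / possible for q, (earned, possible) in responses.items()
    }
    outcome_scores = roll_up(question_scores, QUESTION_TO_OUTCOME)
    skill_scores = roll_up(outcome_scores, OUTCOME_TO_SKILL)
    return roll_up(skill_scores, SKILL_TO_COMPETENCY)


# Made-up sample data: three questions across two learning outcomes.
responses = {"q1": (1, 1), "q2": (1, 2), "q3": (2, 2)}
print(composite_competency_scores(responses))
```

Averaging at each level weights every learning outcome equally no matter how many questions address it. Weighting by question count or by points possible would be an equally defensible choice.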

The challenge in doing this with virtually all educational programs is that learning outcomes are largely implicit and it was a ton of work to sort out what they actually were and tie assessment questions to the learning outcomes they were intended to address, especially given the limits of my medical knowledge. It’s also tricky to normalize the scores on questions to a common scale, but this is more of a technical issue.

Now, at J-WEL, I’ve been working with a new competency framework that we’ll release soon, which addresses so-called 21st-century skills. At the same time, I am overseeing a team that is responsible for a fairly diverse and complicated set of tasks including event management, web publishing, a range of communication tasks, and customer service. Both from the perspective of understanding how the new competency framework can be used and from the perspective of managing the skills needed by my team and the opportunities for providing effective feedback, I found myself thinking again about issues of tracking competencies.

That, coupled with the years I have spent watching my wife develop very detailed rubrics for her high school English students and a fortuitous conversation with a colleague, led me to the minor (and probably not original) epiphany that I could articulate pretty clear performance outcomes for my team with respect to the major tasks they were being asked to execute, such as planning and managing our semiannual conference-type events. For each of these, I could then articulate a rubric for measuring the success of that outcome, and all of those outcomes could then be linked to the generic skills they required and on up to the relevant competencies (including the new 21st-century skills framework).
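As a rough illustration of how a rubric for one performance outcome might feed into skill scores, consider the sketch below. The rubric criteria, level scale, and skill names are hypothetical and are not taken from my prototype or from the forthcoming framework; the step from skills up to competencies is left out because it would be the same kind of mapping.

```python
from collections import defaultdict

# Hypothetical rubric for one performance outcome: each criterion has a top
# level and the generic skills it draws on (a criterion may touch several).
EVENT_RUBRIC = {
    "logistics_on_schedule": {"max_level": 4, "skills": ["planning"]},
    "attendee_communications": {"max_level": 4, "skills": ["planning", "writing"]},
}


def skill_scores(rubric, ratings):
    """Return skill -> mean normalized rating across the criteria that use it."""
    by_skill = defaultdict(list)
    for criterion, level in ratings.items():
        spec = rubric[criterion]
        normalized = level / spec["max_level"]  # put every criterion on 0-1
        for skill in spec["skills"]:
            by_skill[skill].append(normalized)
    return {skill: sum(vals) / len(vals) for skill, vals in by_skill.items()}


# Made-up ratings for a single event.
ratings = {"logistics_on_schedule": 3, "attendee_communications": 4}
print(skill_scores(EVENT_RUBRIC, ratings))
```

Letting one criterion count toward several skills is one simple way to handle outcomes that address more than one competency, at the cost of counting the same rating more than once.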

This would generate a handful of really interesting opportunities, including the chance to have nuanced discussions about what success with respect to particular tasks really looked like; to make feedback and performance evaluation less arbitrary and to tie it directly to performance on discrete tasks; to make that feedback more quantitative and less qualitative; and to target areas for professional development very discretely, by identifying areas for growth that might not be otherwise evident.

Long story short, given that developing databases is second nature at this point, I created a prototype, which I have been populating with performance outcomes and rubrics from my team’s actual tasks. Right now, it’s all an intellectual exercise, and it has all of the challenges you’d expect (getting the granularity right, dealing with outcomes that address multiple competencies, etc.), but in general, it is less thorny than working with educational materials and seems manageable to me. Stay tuned for updates on how it’s working.

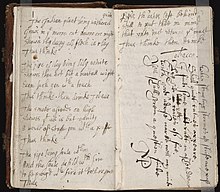

(Post image from Wikimedia Commons, CC BY-SA.)